Neural Network: Forward and Backward Pass, Example

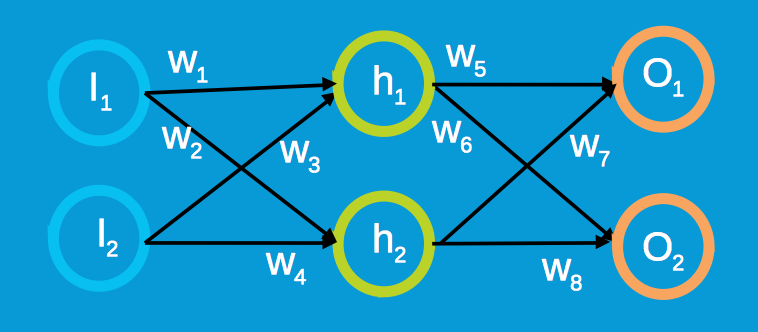

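We consider a neural network with two inputs, one hidden layer with two neurons, and two output neurons. The architecture is shown in the figure. To keep the example simple, every neuron is linear: there are no bias terms and no activation functions, so each neuron outputs the weighted sum of its inputs.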

A neural network with two inputs, one hidden layer with two neurons, and a two-neuron output layer.

The Forward Pass

The hidden layer:

$$
h_1 = w_1\,i_1 + w_2\,i_2
$$

$$
h_2 = w_3\,i_1 + w_4\,i_2
$$

The output layer:

$$
o_1 = w_5\,h_1 + w_6\,h_2
$$

$$
o_2 = w_7\,h_1 + w_8\,h_2
$$

We use the squared error as the loss, where $y_1$ and $y_2$ are the target values for the two outputs:

$$
E = E_{o_1} + E_{o_2} = \frac{1}{2}(y_1 - o_1)^2 + \frac{1}{2}(y_2 - o_2)^2
$$
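
As a minimal sketch, the forward pass and the squared error can be written in plain Python. The function names `forward` and `squared_error`, the dict layout for the weights (keys 1 to 8 standing for $w_1$ to $w_8$), and the numbers in the usage example are illustrative assumptions, not part of the original example.

```python
# Minimal forward pass for the 2-2-2 linear network (no biases, no activations).
# Hypothetical layout: weights live in a dict w keyed 1..8, matching w_1..w_8.


def forward(i1, i2, w):
    """Return (h1, h2, o1, o2) for inputs i1, i2 and weights w."""
    h1 = w[1] * i1 + w[2] * i2  # hidden neuron 1
    h2 = w[3] * i1 + w[4] * i2  # hidden neuron 2
    o1 = w[5] * h1 + w[6] * h2  # output neuron 1
    o2 = w[7] * h1 + w[8] * h2  # output neuron 2
    return h1, h2, o1, o2


def squared_error(y1, y2, o1, o2):
    """E = E_o1 + E_o2 = 1/2 (y1 - o1)^2 + 1/2 (y2 - o2)^2."""
    return 0.5 * (y1 - o1) ** 2 + 0.5 * (y2 - o2) ** 2


if __name__ == "__main__":
    # Illustrative values only.
    w = {1: 0.1, 2: 0.2, 3: 0.3, 4: 0.4, 5: 0.5, 6: 0.6, 7: 0.7, 8: 0.8}
    h1, h2, o1, o2 = forward(1.0, 2.0, w)
    print(o1, o2, squared_error(1.0, 1.0, o1, o2))  # roughly 0.91, 1.23, 0.0305
```

With these values the hidden neurons give $h_1 = 0.5$ and $h_2 = 1.1$, and both outputs miss their target of 1, so $E > 0$.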

The Backward Pass

The output layer:

$$
\frac{\partial E}{\partial w_5}=\frac{\partial E}{\partial o_1}\cdot\frac{\partial o_1}{\partial w_5}
$$

$$
\frac{\partial E}{\partial w_6}=\frac{\partial E}{\partial o_1}\cdot\frac{\partial o_1}{\partial w_6}
$$

$$
\frac{\partial E}{\partial w_7}=\frac{\partial E}{\partial o_2}\cdot\frac{\partial o_2}{\partial w_7}
$$

$$
\frac{\partial E}{\partial w_8}=\frac{\partial E}{\partial o_2}\cdot\frac{\partial o_2}{\partial w_8}
$$
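
Evaluating each factor from the forward-pass equations makes these gradients explicit. Since the term $\frac{1}{2}(y_2-o_2)^2$ does not depend on $o_1$ (and vice versa), each output-layer weight receives the error of a single output neuron:

$$
\frac{\partial E}{\partial o_1}=-(y_1-o_1)=o_1-y_1,\qquad \frac{\partial E}{\partial o_2}=o_2-y_2
$$

$$
\frac{\partial o_1}{\partial w_5}=h_1,\qquad \frac{\partial o_1}{\partial w_6}=h_2,\qquad \frac{\partial o_2}{\partial w_7}=h_1,\qquad \frac{\partial o_2}{\partial w_8}=h_2
$$

$$
\frac{\partial E}{\partial w_5}=(o_1-y_1)\,h_1,\qquad \frac{\partial E}{\partial w_6}=(o_1-y_1)\,h_2,\qquad \frac{\partial E}{\partial w_7}=(o_2-y_2)\,h_1,\qquad \frac{\partial E}{\partial w_8}=(o_2-y_2)\,h_2
$$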

The hidden layer:

$$
\frac{\partial E}{\partial w_1}=\left(\frac{\partial E}{\partial o_1}\cdot\frac{\partial o_1}{\partial h_1}+\frac{\partial E}{\partial o_2}\cdot\frac{\partial o_2}{\partial h_1}\right)\cdot\frac{\partial h_1}{\partial w_1}
$$

$$
\frac{\partial E}{\partial w_2}=\left(\frac{\partial E}{\partial o_1}\cdot\frac{\partial o_1}{\partial h_1}+\frac{\partial E}{\partial o_2}\cdot\frac{\partial o_2}{\partial h_1}\right)\cdot\frac{\partial h_1}{\partial w_2}
$$

$$
\frac{\partial E}{\partial w_3}=\left(\frac{\partial E}{\partial o_1}\cdot\frac{\partial o_1}{\partial h_2}+\frac{\partial E}{\partial o_2}\cdot\frac{\partial o_2}{\partial h_2}\right)\cdot\frac{\partial h_2}{\partial w_3}
$$

$$
\frac{\partial E}{\partial w_4}=\left(\frac{\partial E}{\partial o_1}\cdot\frac{\partial o_1}{\partial h_2}+\frac{\partial E}{\partial o_2}\cdot\frac{\partial o_2}{\partial h_2}\right)\cdot\frac{\partial h_2}{\partial w_4}
$$
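
The remaining factors follow from the same linear definitions. The parenthesised sum is the error reaching a hidden neuron through both output neurons, which is why each hidden-layer weight has two chain-rule paths while each output-layer weight has only one:

$$
\frac{\partial o_1}{\partial h_1}=w_5,\qquad \frac{\partial o_2}{\partial h_1}=w_7,\qquad \frac{\partial o_1}{\partial h_2}=w_6,\qquad \frac{\partial o_2}{\partial h_2}=w_8
$$

$$
\frac{\partial h_1}{\partial w_1}=i_1,\qquad \frac{\partial h_1}{\partial w_2}=i_2,\qquad \frac{\partial h_2}{\partial w_3}=i_1,\qquad \frac{\partial h_2}{\partial w_4}=i_2
$$

Using $\frac{\partial E}{\partial o_k}=o_k-y_k$, obtained by differentiating the squared error, this gives:

$$
\frac{\partial E}{\partial w_1}=\big((o_1-y_1)\,w_5+(o_2-y_2)\,w_7\big)\,i_1,\qquad \frac{\partial E}{\partial w_2}=\big((o_1-y_1)\,w_5+(o_2-y_2)\,w_7\big)\,i_2
$$

$$
\frac{\partial E}{\partial w_3}=\big((o_1-y_1)\,w_6+(o_2-y_2)\,w_8\big)\,i_1,\qquad \frac{\partial E}{\partial w_4}=\big((o_1-y_1)\,w_6+(o_2-y_2)\,w_8\big)\,i_2
$$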

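The chain-rule expressions can be checked numerically. The sketch below computes all eight gradients analytically, following the output-layer and hidden-layer formulas, and compares them with central finite differences of $E$. The helper names (`forward`, `loss`, `backward`, `numerical_grad`), the dict layout for the weights (keys 1 to 8 for $w_1$ to $w_8$), and the numeric values are hypothetical choices for illustration.

```python
# Backward pass for the 2-2-2 linear network, checked against finite differences.
# Hypothetical layout: weights live in a dict w keyed 1..8, matching w_1..w_8.


def forward(i1, i2, w):
    h1 = w[1] * i1 + w[2] * i2
    h2 = w[3] * i1 + w[4] * i2
    o1 = w[5] * h1 + w[6] * h2
    o2 = w[7] * h1 + w[8] * h2
    return h1, h2, o1, o2


def loss(i1, i2, y1, y2, w):
    _, _, o1, o2 = forward(i1, i2, w)
    return 0.5 * (y1 - o1) ** 2 + 0.5 * (y2 - o2) ** 2


def backward(i1, i2, y1, y2, w):
    """Analytic dE/dw_k for k = 1..8 via the chain rule."""
    h1, h2, o1, o2 = forward(i1, i2, w)
    # dE/do_k = -(y_k - o_k) = o_k - y_k
    d_o1 = o1 - y1
    d_o2 = o2 - y2
    # Error reaching each hidden neuron, summed over both output paths
    d_h1 = d_o1 * w[5] + d_o2 * w[7]
    d_h2 = d_o1 * w[6] + d_o2 * w[8]
    return {
        5: d_o1 * h1, 6: d_o1 * h2,  # output layer, neuron o1
        7: d_o2 * h1, 8: d_o2 * h2,  # output layer, neuron o2
        1: d_h1 * i1, 2: d_h1 * i2,  # hidden layer, neuron h1
        3: d_h2 * i1, 4: d_h2 * i2,  # hidden layer, neuron h2
    }


def numerical_grad(i1, i2, y1, y2, w, eps=1e-6):
    """Central-difference estimate of dE/dw_k for every weight."""
    grads = {}
    for k in w:
        plus = dict(w)
        plus[k] += eps
        minus = dict(w)
        minus[k] -= eps
        grads[k] = (loss(i1, i2, y1, y2, plus) - loss(i1, i2, y1, y2, minus)) / (2 * eps)
    return grads


if __name__ == "__main__":
    # Illustrative values only.
    w = {1: 0.1, 2: 0.2, 3: 0.3, 4: 0.4, 5: 0.5, 6: 0.6, 7: 0.7, 8: 0.8}
    analytic = backward(1.0, 2.0, 1.0, 1.0, w)
    numeric = numerical_grad(1.0, 2.0, 1.0, 1.0, w)
    for k in sorted(w):
        print(f"w{k}: analytic={analytic[k]:.6f} numeric={numeric[k]:.6f}")
```

Because $E$ is quadratic in any single weight when the others are held fixed, the central difference matches the analytic gradient up to floating-point rounding, so each printed pair should agree closely.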